With my Proxmox server up and running, it's now time to play with some new applications, starting with Immich, a self-hosted replacement for Google Photos. Here's how Immich describes itself on its website:

Now, a quick word of caution: Immich's website states that you should not use it as the only place you store your photos and videos, because it's under *active* development and does occasionally break things. With that in mind, let's get into it and have a play.

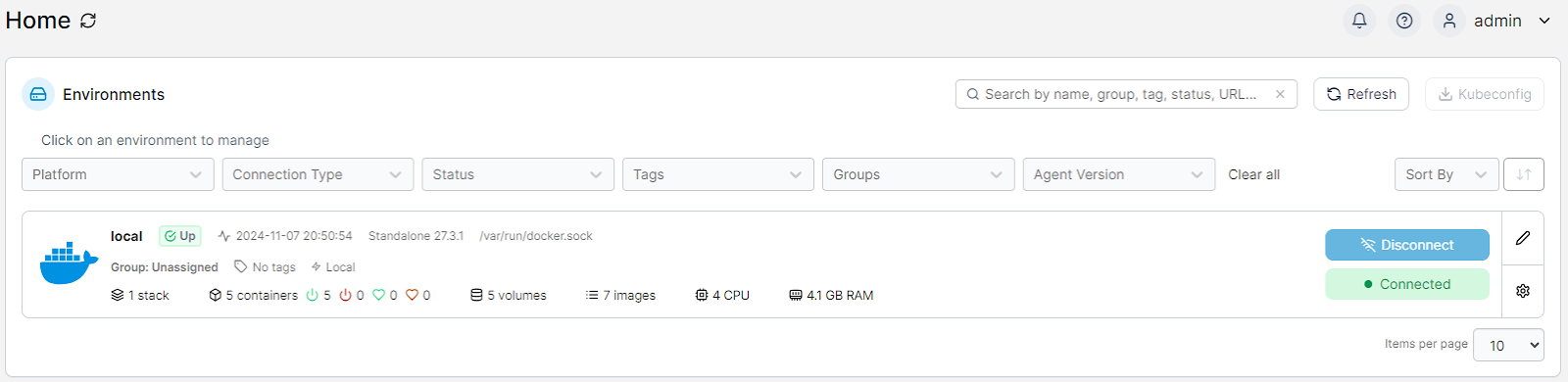

To run Immich in my environment I had a few options to explore. I could install it using Docker, the All-In-One community installation, TrueNAS (oh, I don't have that yet) or Unraid (dang, I don't have that either). I decided to go with the Portainer installation, which gives me a nice interface for managing the containers, plus some stats and other bits later on. Portainer runs on Docker, and running Docker inside a Proxmox LXC container isn't recommended, so I went with a full VM instead; I was keen not to screw this up. So here's the quick and nasty installation:

- Create the VM in Proxmox:

  - 4 vCPU

  - 4GB of RAM (I really should have gone with 8GB, and I'm now updating it)

  - 500GB of disk space on two brand new Corsair SSDs (both 1TB, for plenty of headroom)

- Install Ubuntu Server 24.04 LTS

- Install Docker following the instructions here: https://docs.docker.com/engine/install/ubuntu/

- Install Portainer following the instructions here: https://docs.portainer.io/start/install-ce/server/docker/linux
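
Given the RAM regret above, here's a small sketch of a pre-flight check that could be run on the VM before installing. `check_vm_resources` is a hypothetical helper written for this post, and its default targets (8GB of RAM, 4 vCPUs) simply reflect where this VM is heading, not Immich's official requirements, so check Immich's requirements page for the current numbers.

```bash
#!/usr/bin/env bash
# check_vm_resources: hypothetical pre-flight helper that compares this
# machine's RAM and vCPU count against target values. The default targets
# (8GB, 4 vCPUs) are assumptions, not official Immich requirements.
#
# Usage: check_vm_resources [target_gb] [target_cores] [mem_kb] [cores]
# mem_kb and cores default to the live values from /proc/meminfo and nproc;
# passing them explicitly is mainly useful for testing.
check_vm_resources() {
  local target_gb="${1:-8}"
  local target_cores="${2:-4}"
  local mem_kb="${3:-$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)}"
  local cores="${4:-$(nproc)}"
  local status=0

  # MemTotal (in kB) sits a little below the VM's configured RAM because the
  # kernel reserves some, so round to the nearest GiB rather than down.
  local mem_gb=$(( (mem_kb + 524288) / 1048576 ))

  if (( mem_gb < target_gb )); then
    echo "WARN: ${mem_gb}GB RAM, target is ${target_gb}GB"
    status=1
  else
    echo "OK: ${mem_gb}GB RAM"
  fi

  if (( cores < target_cores )); then
    echo "WARN: ${cores} vCPU, target is ${target_cores}"
    status=1
  else
    echo "OK: ${cores} vCPU"
  fi

  return "$status"
}
```

Run `check_vm_resources` with no arguments to check the live VM, or pass different targets, e.g. `check_vm_resources 6 2`. It returns non-zero if anything falls short, so it could also gate an install script.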

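One more step I needed on the VM: the Ubuntu Server installer doesn't necessarily give the root logical volume all of the available disk, so I extended it to use all the free space in the volume group (`-r` resizes the filesystem at the same time), then checked the result:

```bash
sudo lvextend -l +100%FREE -r /dev/mapper/ubuntu--vg-ubuntu--lv
df -h /
```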

Right, now onto the installation of Immich itself, following the guide here: https://immich.app/docs/install/portainer

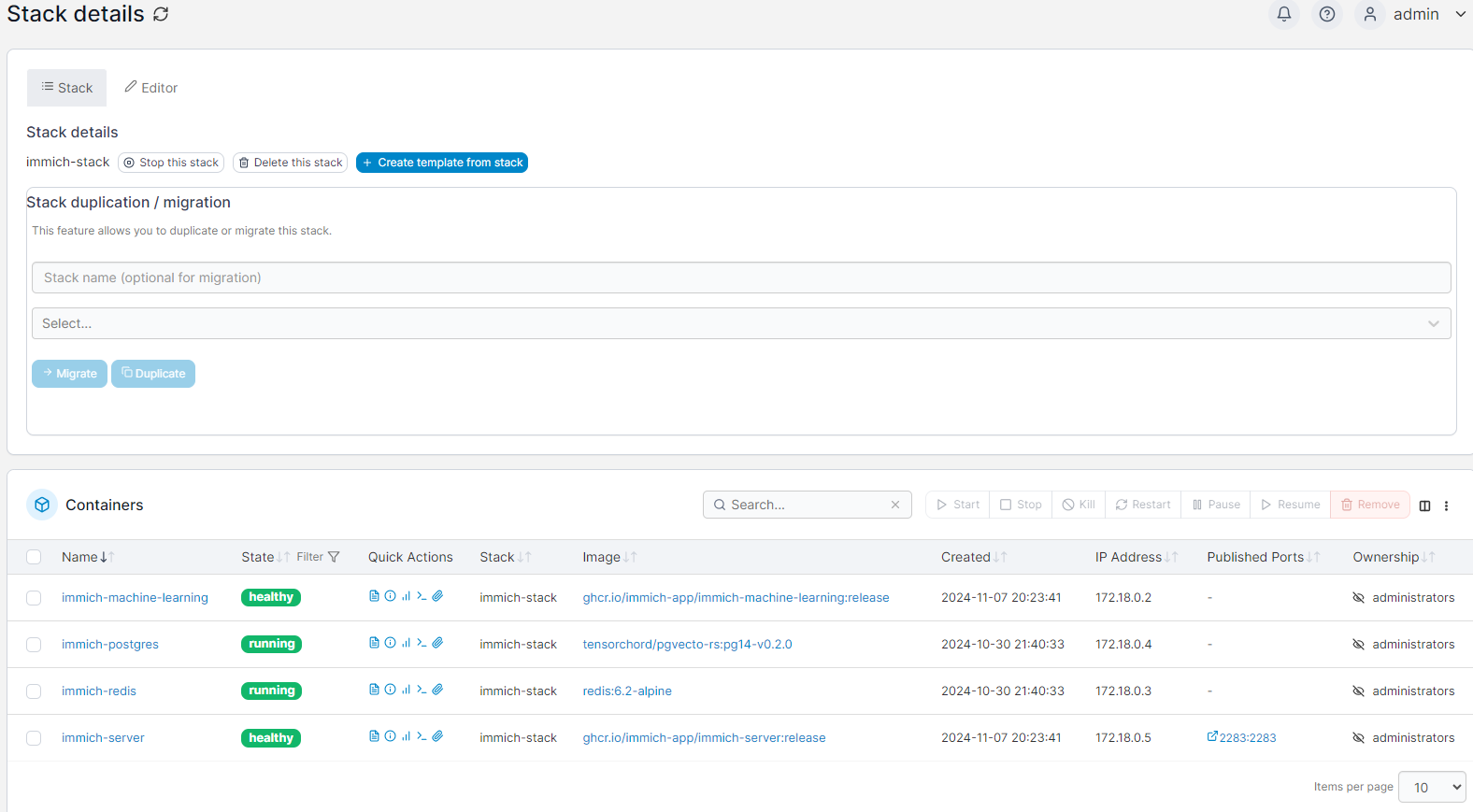

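The long and short of it is: set up a Stack so that all the Immich bits and pieces sit under it, and off we go. (A Portainer stack is essentially a Docker Compose project, so you paste in Immich's compose file and environment variables.) The guide is good, so follow it, and there are plenty of other resources around if you get stuck. The stack ends up looking like this: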

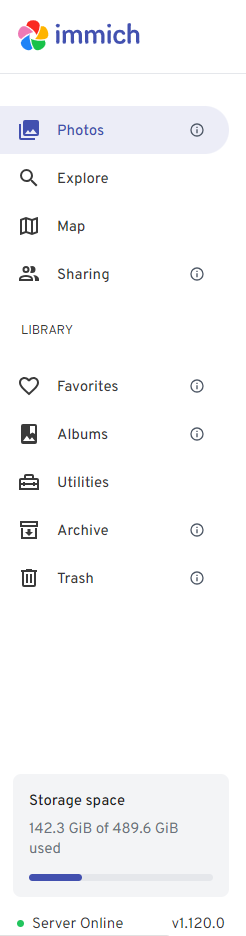
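
Portainer shows the state of the stack nicely, but if you're already SSH'd into the VM, here's a rough sketch of a quick check that the Immich containers are up. `check_immich_containers` is a hypothetical helper, and the container names are assumed to match those set in Immich's example `docker-compose.yml`; adjust the list if your stack names them differently.

```bash
#!/usr/bin/env bash
# check_immich_containers: hypothetical helper that reports whether each
# expected Immich container is currently running. The names below are
# assumed to match Immich's example docker-compose.yml; edit as needed.
IMMICH_CONTAINERS=(immich_server immich_machine_learning immich_redis immich_postgres)

check_immich_containers() {
  local running name missing=0
  # `docker ps` lists only running containers; the format prints one name per line.
  running="$(docker ps --format '{{.Names}}')"

  for name in "${IMMICH_CONTAINERS[@]}"; do
    # -x matches the whole line and -F treats the name as a fixed string,
    # so "immich_server" won't accidentally match a longer name.
    if grep -qxF "$name" <<< "$running"; then
      echo "running: $name"
    else
      echo "MISSING: $name"
      missing=1
    fi
  done

  return "$missing"
}
```

It needs permission to talk to Docker (run it as a user in the `docker` group, or from a root shell), and it returns non-zero if any expected container isn't running.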

The menu is nice and easy to manage, and it gives you all the stats. I'm still building this system out, but here are a few things I've found so far:

- uploading photos to the system was easy via the web upload: you can select files in bulk, but not whole folders

- uploading photos is VERY CPU intensive: all 4 vCPUs were maxed out during the upload and for a while afterwards as Immich processed everything (it runs background jobs such as thumbnail generation and machine learning on new uploads). It was cracking along, and the server's fans were making a real racket.

- it's much better to upload over Ethernet than Wi-Fi, as it's quicker (on my network, anyway)
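
To see which container is actually chewing the CPU while an upload is being processed, `docker stats` can take a one-off snapshot. The sketch below wraps it in a hypothetical `immich_cpu_snapshot` helper that keeps only containers whose names start with `immich_` (an assumption based on Immich's example compose file) and sorts them by CPU usage, highest first.

```bash
#!/usr/bin/env bash
# immich_cpu_snapshot: hypothetical helper that prints one sample of CPU and
# memory use for the Immich containers, busiest first. Assumes the container
# names start with "immich_", as in Immich's example docker-compose.yml.
immich_cpu_snapshot() {
  # --no-stream takes a single sample instead of refreshing continuously;
  # the format prints "<name> <cpu%> <mem usage>" on each line.
  # sort -n reads the leading number of "12.50%", so the % sign is harmless.
  docker stats --no-stream --format '{{.Name}} {{.CPUPerc}} {{.MemUsage}}' \
    | grep '^immich_' \
    | LC_ALL=C sort -t ' ' -k2,2 -rn
}
```

Run it a few times during a bulk upload; plain `docker stats` (without `--no-stream`) gives a continuously updating view instead. Note that on a multi-core VM a single container's CPU figure can go above 100%.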